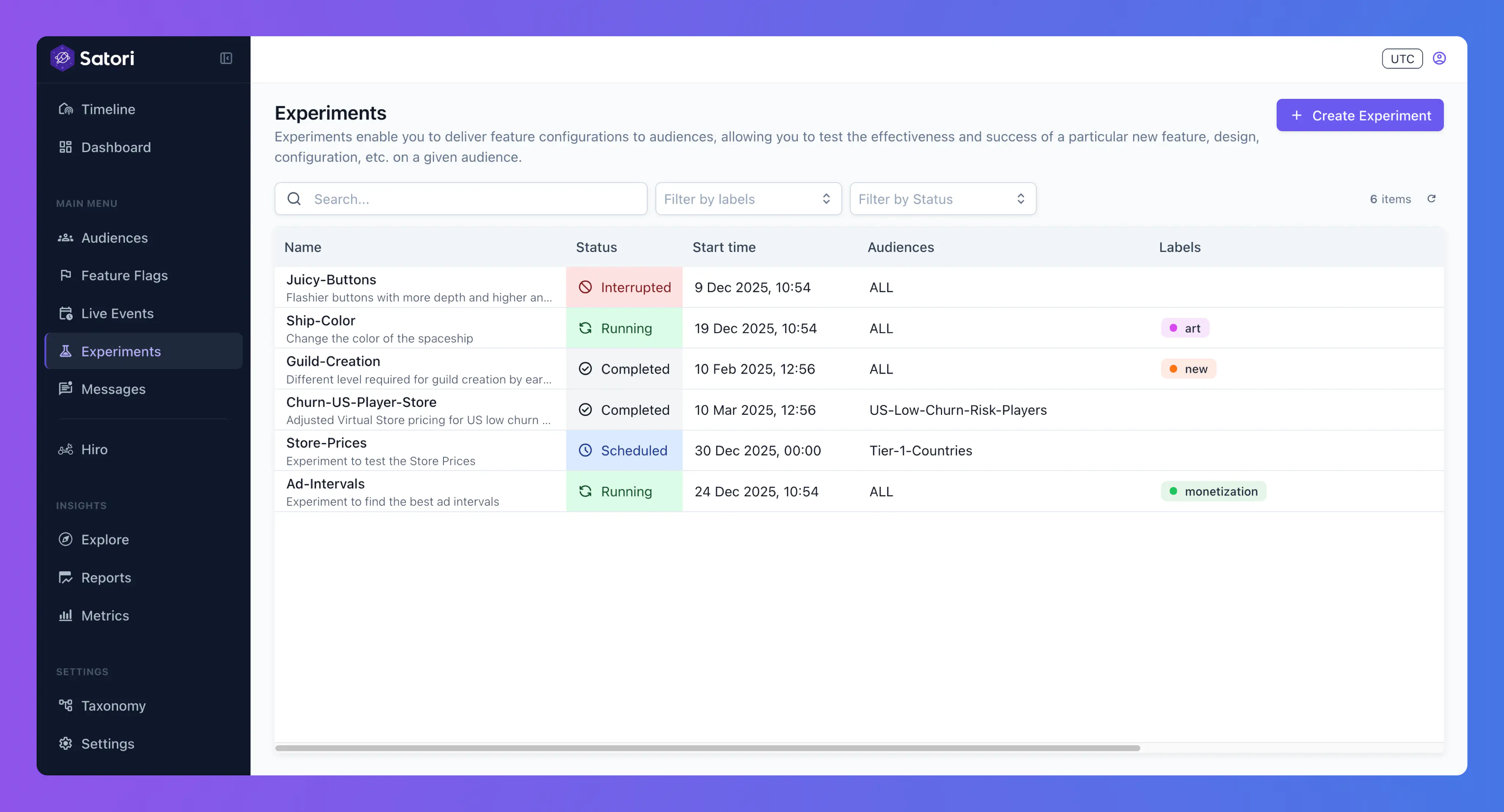

Experiments

Experiments let you deliver feature configurations to audiences so you can test how effective a new feature, design, or configuration is for a given audience.

Experiments differ from feature flags in that they run for a fixed duration, are scoped to a particular audience, and are measurement-oriented: they test your assumptions about how a new feature will change a given metric.

Use experiments to iterate on your game design and make data-driven decisions. Successful experiments can then be converted into permanent feature flags for your players.

From the Experiments page in the Satori dashboard, you can create, edit, and delete experiments. You can review your existing experiments using a list view.

Audience assignment

Identities are assigned to experiments based on their audience membership. Within any experiment, you can specify the audiences that will participate in the experiment, and how the identities in those audiences will be split across the experiment variants.

For example, you can create an experiment to test a new game mode on your non-spender audience and specify a 50/50 split between the control variant and the new-game-mode variant. This does not guarantee that the audience will be split exactly in half. Rather, each identity in the audience independently has a 50% chance of being assigned to the control variant and a 50% chance of being assigned to the new-game-mode variant.
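
The per-identity nature of the split can be illustrated with a short simulation. This is a minimal Python sketch, not Satori's implementation: `assign_variant` and `simulate` are hypothetical helpers, and each identity's variant is drawn independently with Python's `random.choices` using the configured weights. Across many identities the counts land near 50/50, but an exact even split is not guaranteed.

```python
import random
from collections import Counter


def assign_variant(rng: random.Random, weights: dict[str, float]) -> str:
    """Draw one variant for a single identity, independently of every other identity."""
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=1)[0]


def simulate(num_identities: int, weights: dict[str, float], seed: int = 42) -> Counter:
    """Assign each identity in turn and count how many landed in each variant."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return Counter(assign_variant(rng, weights) for _ in range(num_identities))


# Hypothetical 50/50 split on a 1,000-identity non-spender audience.
counts = simulate(1000, {"control": 50, "new-game-mode": 50})
print(counts)  # each count is close to 500, but an exact 500/500 split is not guaranteed
```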

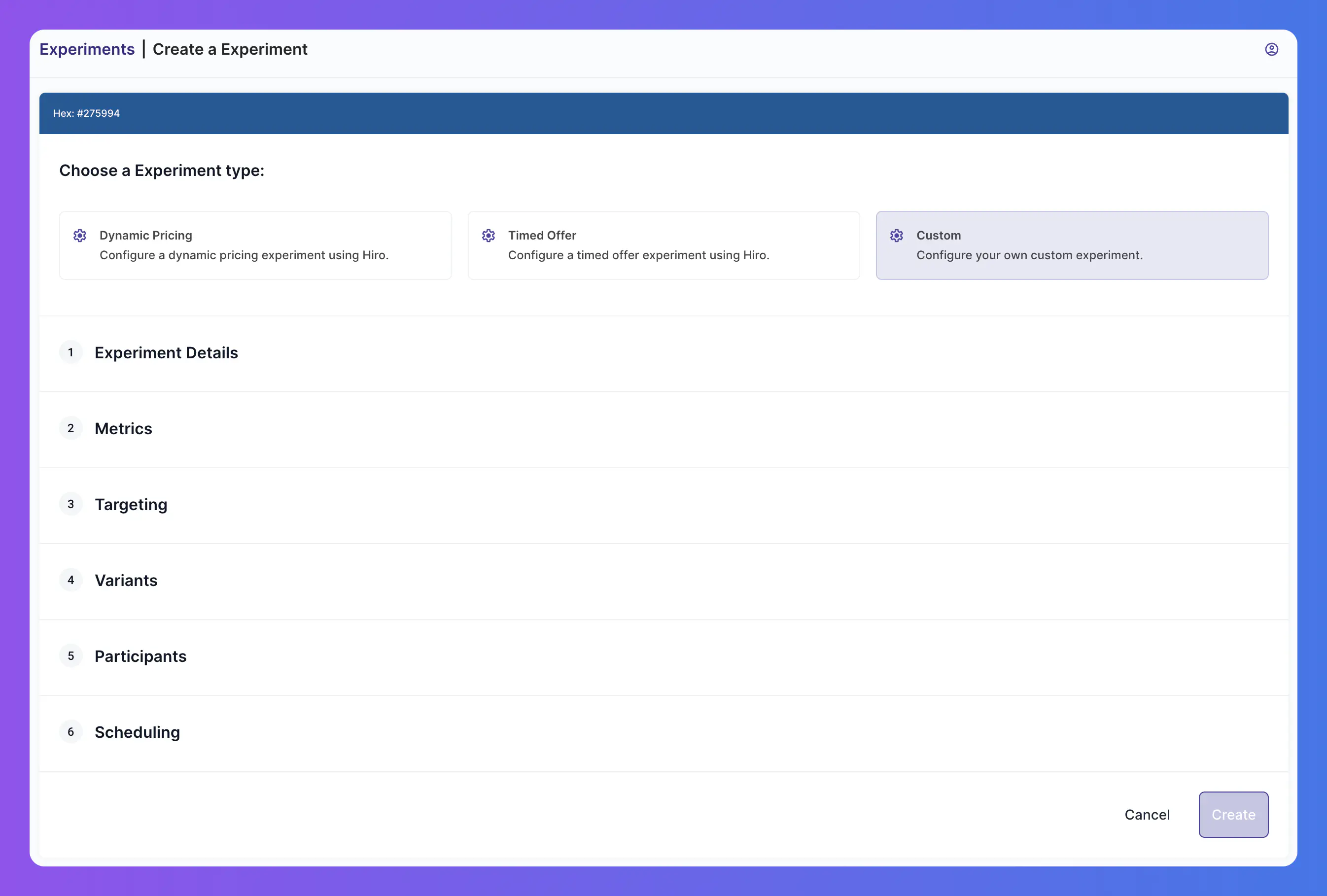

Creating experiments

Click the + New Experiment button to open the Create Experiment wizard.

- Select the Experiment Type:
  - Dynamic Pricing: A template experiment type for testing different pricing strategies using Hiro.
  - Custom: A custom experiment type for testing any other type of feature or configuration.
  - Offer Wall: A template experiment type for creating timed offers using Hiro.

- Provide the Experiment Details:
  - Name: Enter a unique name for this experiment.
  - Description: Provide a description of the experiment.

- Configure the Targeting for this experiment:
  - Audiences: Select the audiences to participate in the experiment, or create a new audience.
  - Exclude Audiences: Select the audiences, if any, to exclude from the experiment.

- Define the Metrics for this experiment:
  - Goal Metrics: The metrics you expect the experiment to influence. You can create a new metric or select an existing one.
  - Monitor Metrics: Additional metrics to monitor during the experiment. You can create a new metric or select an existing one.

- Configure the Variants for this experiment:
  - Schema Validator: Select the validator to use for this variant's data. You can create a new validator or select an existing one.
  - Affected Feature Flags: The feature flags associated with this variant. The feature flag values set here override the default values for the audience. You can create a new feature flag or select an existing one.
  - Variants: Click + Add Variant to create a new variant for this experiment, then enter the following details:
    - Variant Name: Enter a unique name for each variant.
    - Value: Enter the game data value associated with this variant.

- Define the Participants for this experiment:
  - Split Key: Select how identities are split across the experiment variants:
    - ID + Phase Start (dynamic): The default setting. Identities are assigned to a variant based on their identity ID and the start time of the current phase, so an identity may land in a different variant when a new phase starts. Variant assignments are stable across deletion and recreation of an identity.
    - ID + Phase Start + Create Time (random): Identities are assigned to a variant based on their identity ID, the start time of the current phase, and the time the identity was created. Variant assignments are not stable across deletion and recreation of an identity.
    - ID (stable): Identities are assigned to a variant based only on their identity ID, so the phase start time does not affect which variant an identity receives.
  - Max Participants: The maximum number of identities that can participate in this experiment.

- Set the Scheduling for this experiment:
  - Start Time: The date and time when the experiment begins.
  - End Time: The date and time when the experiment ends.
  - Admission Deadline: The date and time after which no new participants can join the experiment.
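
The three Split Key options differ in what goes into the value used to pick a variant. The Python sketch below assumes deterministic hash-based bucketing; the key format, the use of SHA-256, the mode strings, and the helpers `split_key`, `bucket`, and `pick_variant` are illustrative assumptions, not Satori's documented algorithm. It shows why a recreated identity keeps its variant under the dynamic key, and why the stable key gives the same variant regardless of phase.

```python
import hashlib
from datetime import datetime, timezone


def split_key(identity_id: str, phase_start: datetime, created_at: datetime, mode: str) -> str:
    """Build the hypothetical hashing input for each Split Key option."""
    if mode == "id":  # ID (stable)
        parts = [identity_id]
    elif mode == "id_phase":  # ID + Phase Start (dynamic)
        parts = [identity_id, phase_start.isoformat()]
    elif mode == "id_phase_create":  # ID + Phase Start + Create Time (random)
        parts = [identity_id, phase_start.isoformat(), created_at.isoformat()]
    else:
        raise ValueError(f"unknown split key mode: {mode}")
    return "|".join(parts)


def bucket(key: str) -> float:
    """Map a key to a deterministic float in [0, 1) using the top 53 bits of its SHA-256 hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def pick_variant(key: str, weights: list[tuple[str, float]]) -> str:
    """Walk the cumulative weights until the key's bucket falls inside a variant's range."""
    point = bucket(key) * sum(weight for _, weight in weights)
    cumulative = 0.0
    for name, weight in weights:
        cumulative += weight
        if point < cumulative:
            return name
    return weights[-1][0]  # guard against floating-point rounding at the upper edge


variants = [("control", 50), ("new-game-mode", 50)]
phase_1 = datetime(2024, 1, 1, tzinfo=timezone.utc)
phase_2 = datetime(2024, 3, 1, tzinfo=timezone.utc)
created = datetime(2023, 6, 1, tzinfo=timezone.utc)
recreated = datetime(2024, 2, 1, tzinfo=timezone.utc)  # same ID, new creation time

# Dynamic: creation time is not hashed, so a deleted-and-recreated identity keeps its variant.
assert pick_variant(split_key("player-123", phase_1, created, "id_phase"), variants) == \
    pick_variant(split_key("player-123", phase_1, recreated, "id_phase"), variants)

# Stable: the phase is not hashed either, so the variant is the same in every phase.
assert pick_variant(split_key("player-123", phase_1, created, "id"), variants) == \
    pick_variant(split_key("player-123", phase_2, recreated, "id"), variants)
```

Under the random key, `created_at` is part of the hashed input, so recreating an identity can move it to a different variant even within the same phase.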

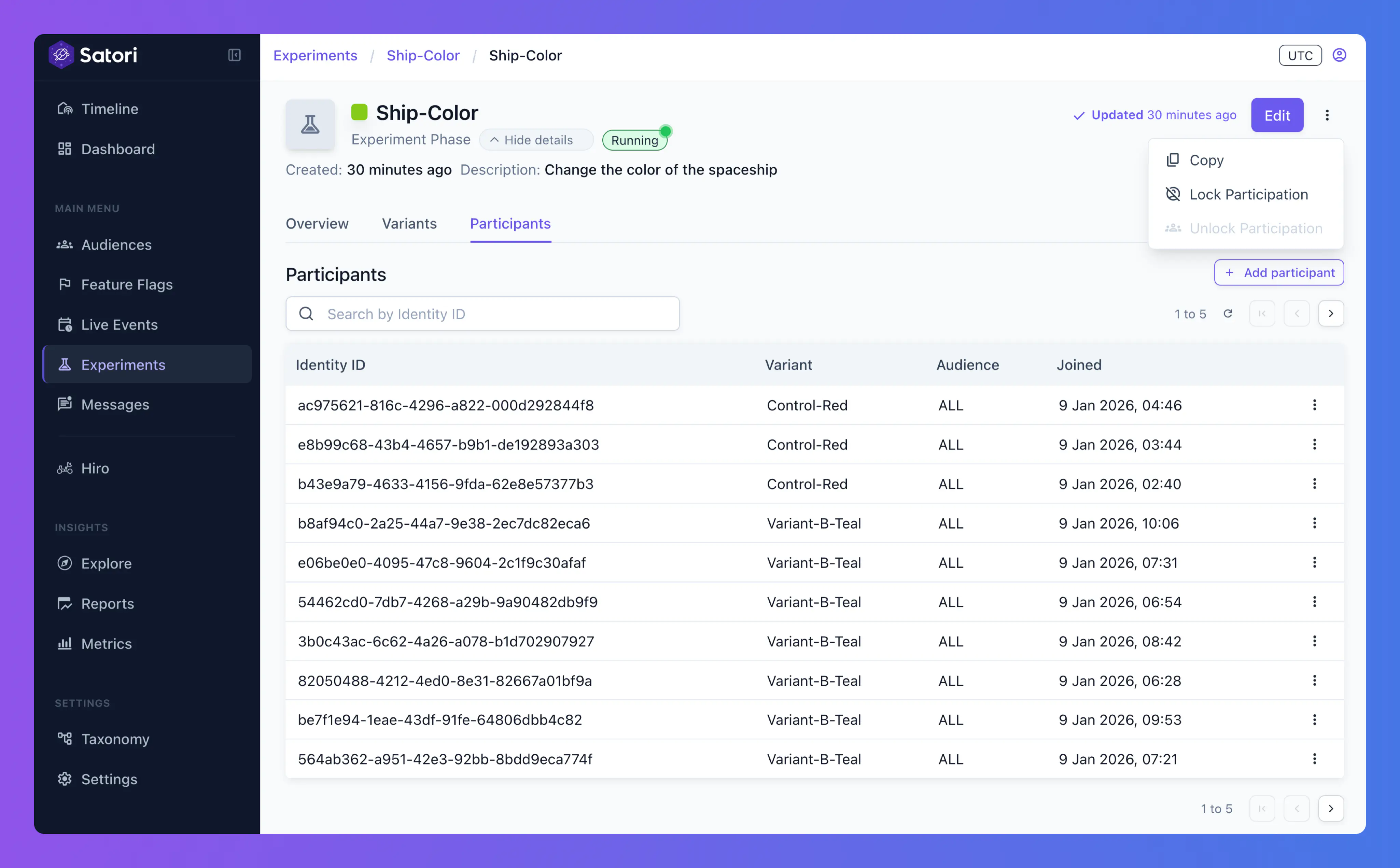

Copy Experiments

For faster creation, you can copy an experiment using the three-dot menu in the top right corner of its details page.

Phases are not copied when an experiment is copied.

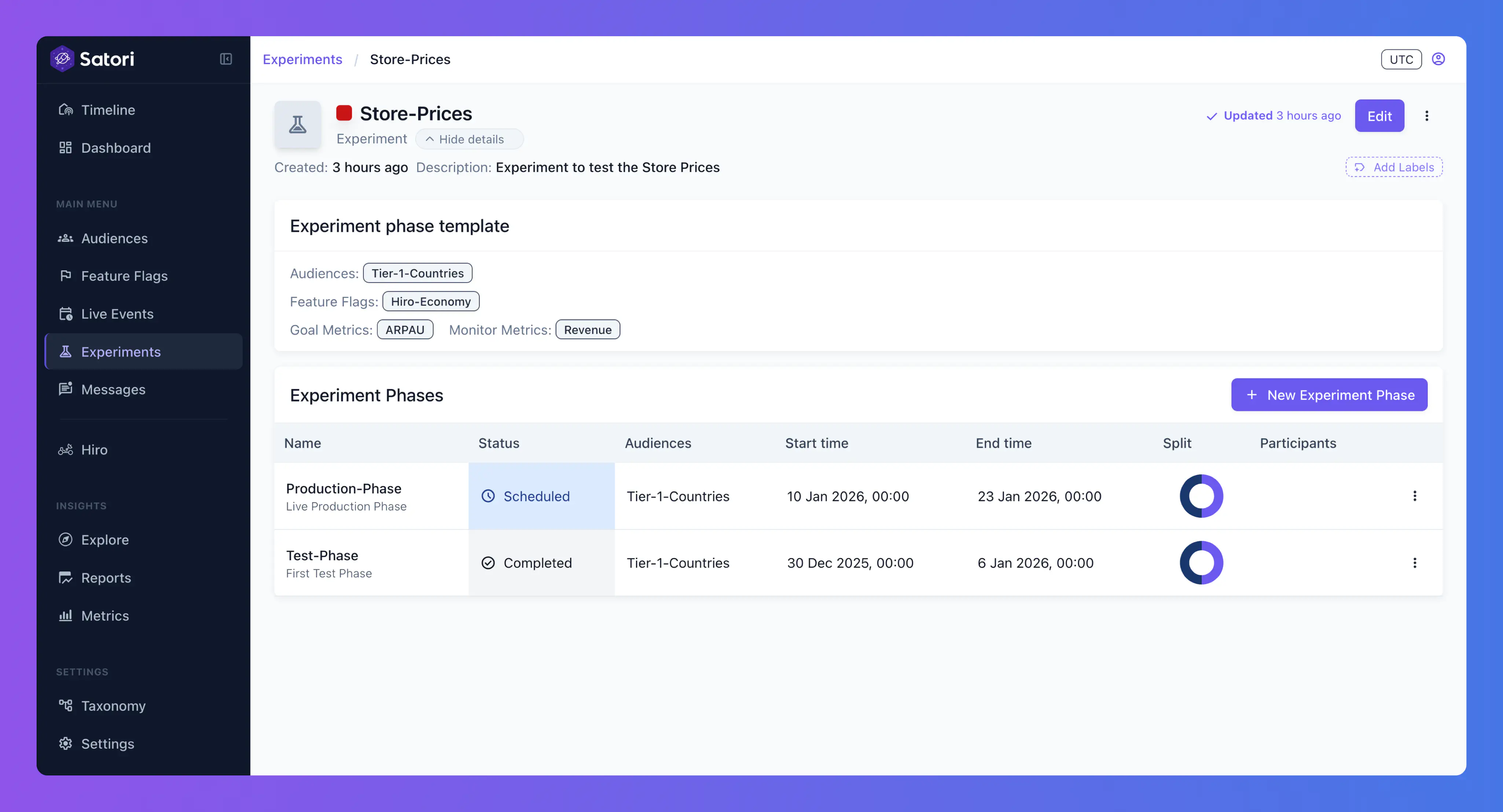

Experiment details

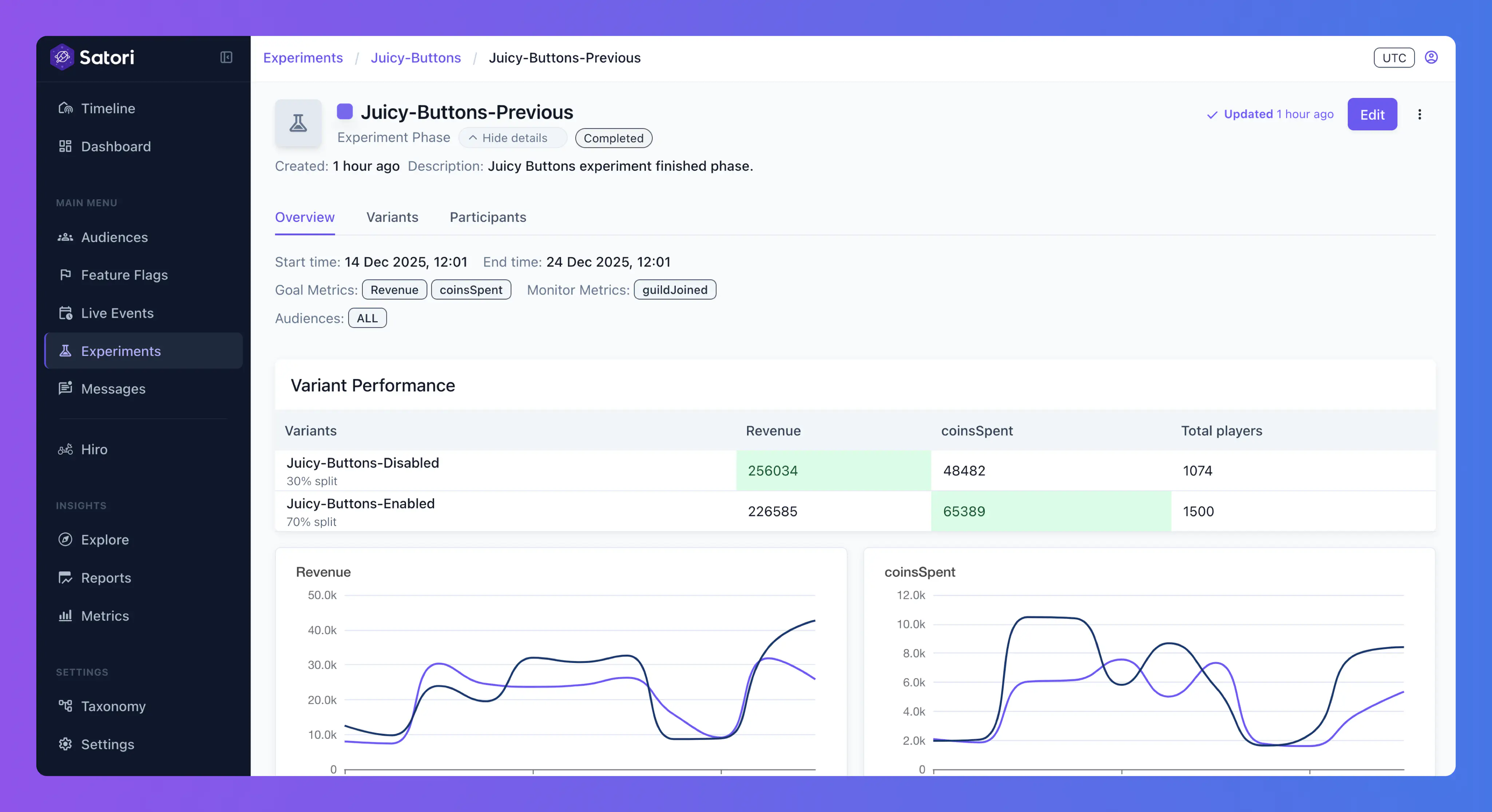

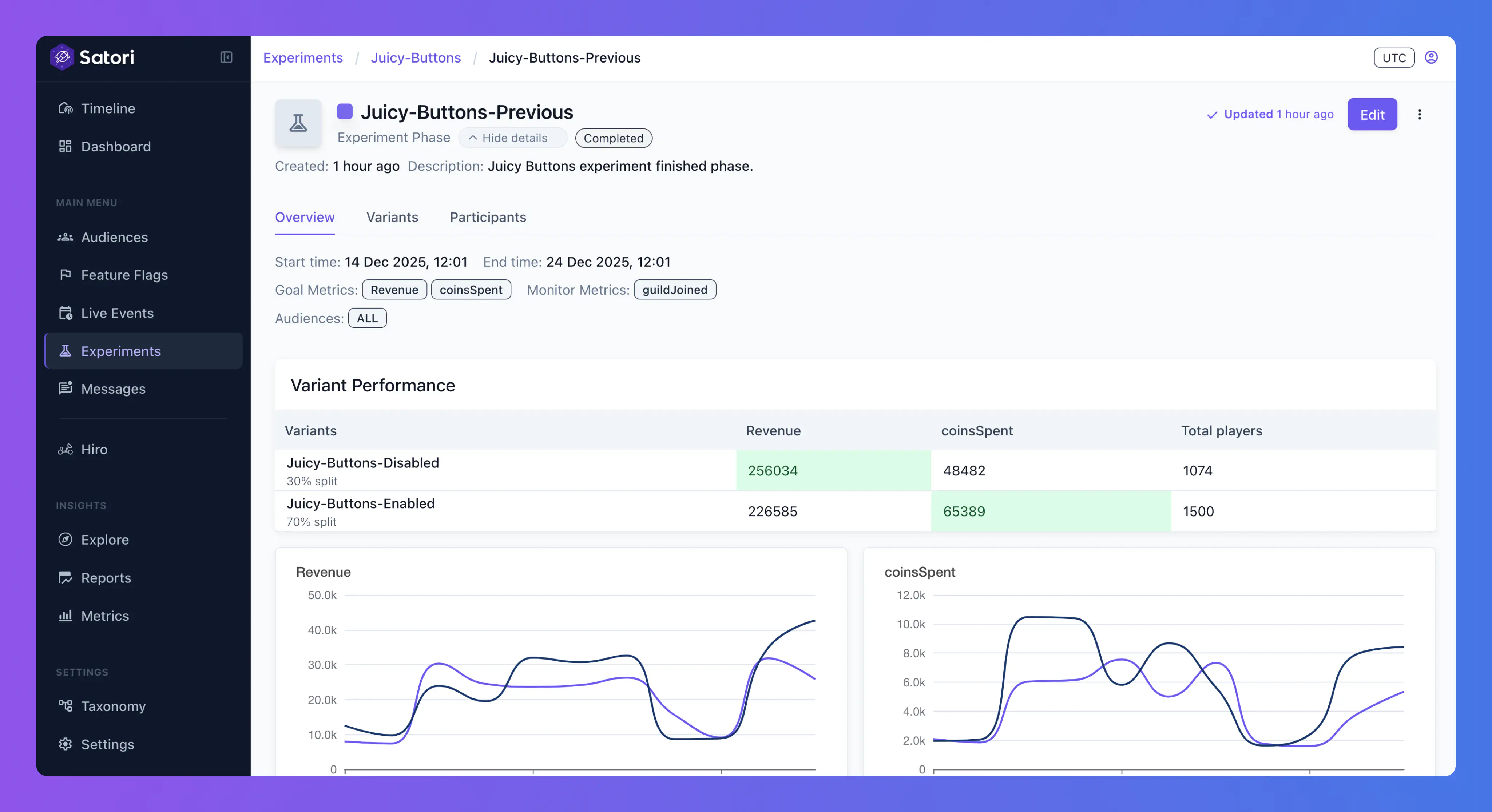

Select an experiment from the list to view its details page. This page contains details about the experiment, including its name, description, audiences, and metrics, as well as the phases and their status.

On this page, you can also create new experiment phases and view the experiment’s current, previous, and scheduled phases.

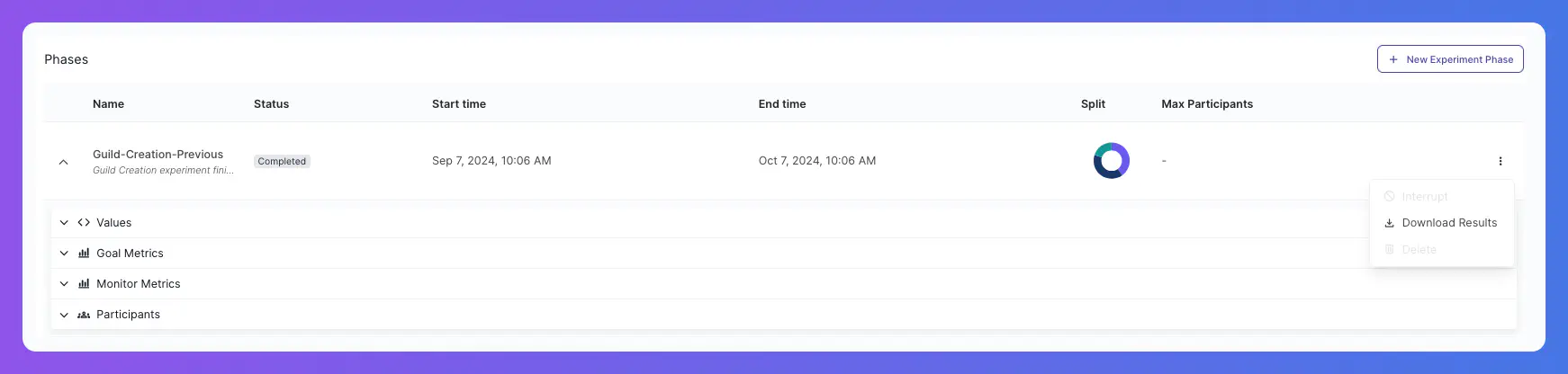

Phases

Phases are used to control the variants that are active during a given time period for a given audience split.
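
To make phases concrete, here is a minimal Python sketch that classifies phases as previous, current, or scheduled relative to a given time and returns the variant split active at that time. The `Phase` class, its fields, and the helper functions are hypothetical; the sketch assumes phases do not overlap and that each phase covers the half-open interval from its start to its end.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Phase:
    name: str
    start: datetime
    end: datetime
    variants: dict[str, float]  # hypothetical: variant name -> split weight for this phase


def phase_status(phase: Phase, now: datetime) -> str:
    """Classify a phase relative to `now`, using [start, end) intervals."""
    if now < phase.start:
        return "scheduled"
    if now >= phase.end:
        return "previous"
    return "current"


def active_variants(phases: list[Phase], now: datetime) -> Optional[dict[str, float]]:
    """Return the variant split of the phase running at `now`, or None if no phase is running."""
    for phase in phases:
        if phase_status(phase, now) == "current":
            return phase.variants
    return None


utc = timezone.utc
phases = [
    Phase("phase-1", datetime(2024, 1, 1, tzinfo=utc), datetime(2024, 2, 1, tzinfo=utc),
          {"control": 50, "new-game-mode": 50}),
    Phase("phase-2", datetime(2024, 2, 1, tzinfo=utc), datetime(2024, 3, 1, tzinfo=utc),
          {"control": 20, "new-game-mode": 80}),
]
now = datetime(2024, 2, 15, tzinfo=utc)
print({p.name: phase_status(p, now) for p in phases})  # {'phase-1': 'previous', 'phase-2': 'current'}
print(active_variants(phases, now))  # {'control': 20, 'new-game-mode': 80}
```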

Click any phase to view the changes in the experiment's goal and monitor metrics during that phase. For a phase that is still running, these results are partial.

Variants

Variants are the different configurations tested on the audience during a phase. You can view each variant and its associated details, such as the schema validator, feature flags, and value, and edit them using the Edit Experiment button.
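
The way a variant's feature flag values override an audience's defaults can be sketched as a simple precedence lookup. This Python example assumes three levels: a participant's variant value wins over any audience-specific default, which in turn wins over the flag's global default. The function `resolve_flag`, its parameters, the flag and value names, and the first-match rule for audience defaults are hypothetical simplifications, not Satori's actual resolution logic.

```python
from typing import Any


def resolve_flag(
    flag_name: str,
    global_default: Any,
    audience_defaults: list[tuple[str, Any]],
    identity_audiences: set[str],
    variant_values: dict[str, Any],
) -> Any:
    """Resolve a feature flag value for one identity (hypothetical three-level precedence)."""
    # 1. The identity's experiment variant overrides everything else for this flag.
    if flag_name in variant_values:
        return variant_values[flag_name]
    # 2. Otherwise use the first matching audience-specific default, in priority order.
    for audience, value in audience_defaults:
        if audience in identity_audiences:
            return value
    # 3. Fall back to the flag's global default.
    return global_default


audience_defaults = [("non-spender", "casual")]
# A non-spender assigned to a variant that sets the flag sees the variant's value.
print(resolve_flag("game_mode", "classic", audience_defaults, {"non-spender"}, {"game_mode": "arena"}))  # arena
# A non-spender outside the experiment sees the audience default.
print(resolve_flag("game_mode", "classic", audience_defaults, {"non-spender"}, {}))  # casual
# Anyone else sees the global default.
print(resolve_flag("game_mode", "classic", audience_defaults, {"spender"}, {}))  # classic
```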

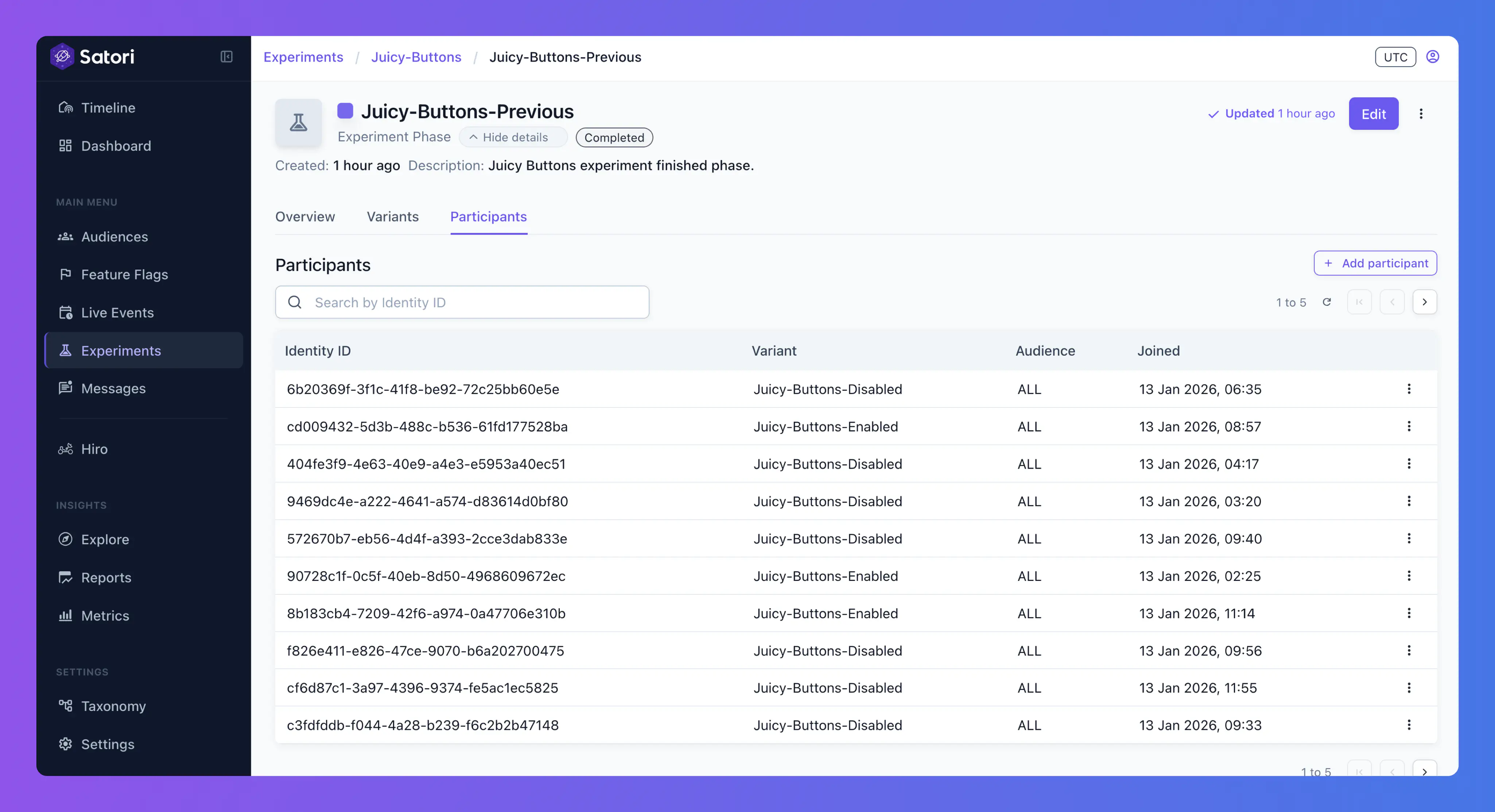

Participants

On the Participants page, you can view and edit the experiment's participants.

Lock Participation

While an experiment is running, you can lock participation from the three-dot menu in the top right corner of the page to prevent more players from joining the experiment.
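
The settings that decide whether a new identity can still join (targeting, Max Participants, Admission Deadline, and Lock Participation) can be combined into one admission check. The Python sketch below is an illustration under stated assumptions: the `Experiment` fields and the `try_join` helper are hypothetical, an identity qualifies by belonging to any target audience and none of the excluded ones, and existing participants are not affected by the lock, the deadline, or the cap.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Experiment:  # hypothetical model of the wizard settings
    audiences: set[str]
    excluded_audiences: set[str]
    start: datetime
    end: datetime
    admission_deadline: Optional[datetime] = None
    max_participants: Optional[int] = None
    participation_locked: bool = False  # toggled by Lock Participation
    participants: set[str] = field(default_factory=set)


def try_join(exp: Experiment, identity_id: str, identity_audiences: set[str], now: datetime) -> bool:
    """Return True if the identity is (or becomes) a participant at time `now`."""
    if not (exp.start <= now < exp.end):
        return False  # outside the experiment's schedule
    if identity_id in exp.participants:
        return True  # existing participants are unaffected by the admission rules below
    if exp.participation_locked:
        return False
    if exp.admission_deadline is not None and now >= exp.admission_deadline:
        return False
    if exp.max_participants is not None and len(exp.participants) >= exp.max_participants:
        return False
    if identity_audiences & exp.excluded_audiences:
        return False  # member of an excluded audience
    if not (identity_audiences & exp.audiences):
        return False  # not in any target audience
    exp.participants.add(identity_id)
    return True


utc = timezone.utc
exp = Experiment(
    audiences={"non-spender"},
    excluded_audiences={"tester"},
    start=datetime(2024, 1, 1, tzinfo=utc),
    end=datetime(2024, 2, 1, tzinfo=utc),
    max_participants=2,
)
now = datetime(2024, 1, 10, tzinfo=utc)
print(try_join(exp, "a", {"non-spender"}, now))            # True
print(try_join(exp, "b", {"non-spender", "tester"}, now))  # False: excluded audience
exp.participation_locked = True
print(try_join(exp, "c", {"non-spender"}, now))            # False: participation locked
print(try_join(exp, "a", {"non-spender"}, now))            # True: already a participant
```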

Copy Experiment Phases

For faster creation, you can copy an experiment phase using the three-dot menu in the top right corner of the phase details.

Further Analysis

When an experiment ends, you can see the results for the Goal Metrics, Monitor Metrics, and Participants. For a more in-depth analysis, you can also download the experiment's results as a .csv file using the Download Results button.
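
Once the results are downloaded, a quick per-variant comparison can be scripted. The column names used here (`variant` and `value`), the file name, and the sample rows are hypothetical placeholders, not the actual layout of Satori's export, so adjust them to match the headers in your .csv file. The sketch uses Python's standard `csv` module to compute the mean goal-metric value per variant and the relative lift of a treatment variant over control. A difference in means on its own does not establish statistical significance.

```python
import csv
import io
from collections import defaultdict


def mean_by_variant(rows, variant_col: str = "variant", value_col: str = "value") -> dict[str, float]:
    """Average a numeric metric column for each variant (column names are hypothetical)."""
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row[variant_col]] += float(row[value_col])
        counts[row[variant_col]] += 1
    return {variant: totals[variant] / counts[variant] for variant in totals}


def relative_lift(treatment_mean: float, control_mean: float) -> float:
    """Relative change of the treatment mean over the control mean (0.25 means +25%)."""
    return (treatment_mean - control_mean) / control_mean


# Hypothetical sample standing in for the downloaded file;
# for a real export, pass csv.DictReader(open("results.csv", newline="")) instead.
sample = """variant,value
control,10
control,20
new-game-mode,30
new-game-mode,30
"""
means = mean_by_variant(csv.DictReader(io.StringIO(sample)))
print(means)  # {'control': 15.0, 'new-game-mode': 30.0}
print(relative_lift(means["new-game-mode"], means["control"]))  # 1.0
```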